OpenCV Image Scaling Transformations: OpenCV from Beginner to Practice (Part 6)

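1 Theory and Concepts

1.1 Geometric Transformations

A geometric transformation can be viewed as a change in the spatial position of the objects (or pixels) in an image, in other words, as a movement of pixels.

A geometric operation requires two steps: a spatial transformation and gray-level interpolation. The transformation maps each pixel to a new coordinate, and that new position may fall between several pixels, that is, it need not have integer coordinates.

Gray-level interpolation is then needed to match the mapped coordinates to the output pixel grid. The simplest method is nearest-neighbor interpolation, which sets the gray value of an output pixel to that of the pixel nearest to the mapped position; it can produce jagged edges. This method is also called zero-order interpolation, and there are more elaborate first-order and higher-order methods.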
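To make the difference between zero-order and first-order interpolation concrete, here is a minimal sketch in plain C++ that samples a tiny grayscale image at a non-integer position. The GrayImage struct and the functions sampleNearest and sampleBilinear are hypothetical names used only for illustration; they are not part of OpenCV, and clamping at the border is just one possible choice.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// A tiny row-major grayscale image; a hypothetical stand-in for cv::Mat.
struct GrayImage {
    int width;
    int height;
    std::vector<double> pixels;  // size == width * height

    // Read a pixel, clamping coordinates to the image border.
    double at(int x, int y) const {
        x = std::max(0, std::min(x, width - 1));
        y = std::max(0, std::min(y, height - 1));
        return pixels[static_cast<std::size_t>(y) * width + x];
    }
};

// Zero-order (nearest-neighbor) interpolation: take the closest pixel.
double sampleNearest(const GrayImage& img, double x, double y) {
    return img.at(static_cast<int>(std::lround(x)),
                  static_cast<int>(std::lround(y)));
}

// First-order (bilinear) interpolation: blend the four surrounding pixels.
double sampleBilinear(const GrayImage& img, double x, double y) {
    const int x0 = static_cast<int>(std::floor(x));
    const int y0 = static_cast<int>(std::floor(y));
    const double fx = x - x0;  // horizontal weight of the right-hand pixels
    const double fy = y - y0;  // vertical weight of the lower pixels
    const double top = (1.0 - fx) * img.at(x0, y0) + fx * img.at(x0 + 1, y0);
    const double bottom =
        (1.0 - fx) * img.at(x0, y0 + 1) + fx * img.at(x0 + 1, y0 + 1);
    return (1.0 - fy) * top + fy * bottom;
}
```

Nearest-neighbor sampling jumps from one pixel value to the next, which is where the jagged edges come from, while the bilinear version changes smoothly between neighbors.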

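For interpolation algorithms a general understanding is probably enough; what matters more in image processing is understanding the spatial transformation.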

1.2 Spatial Transformations

10. Image Transformation Functions in OpenCV

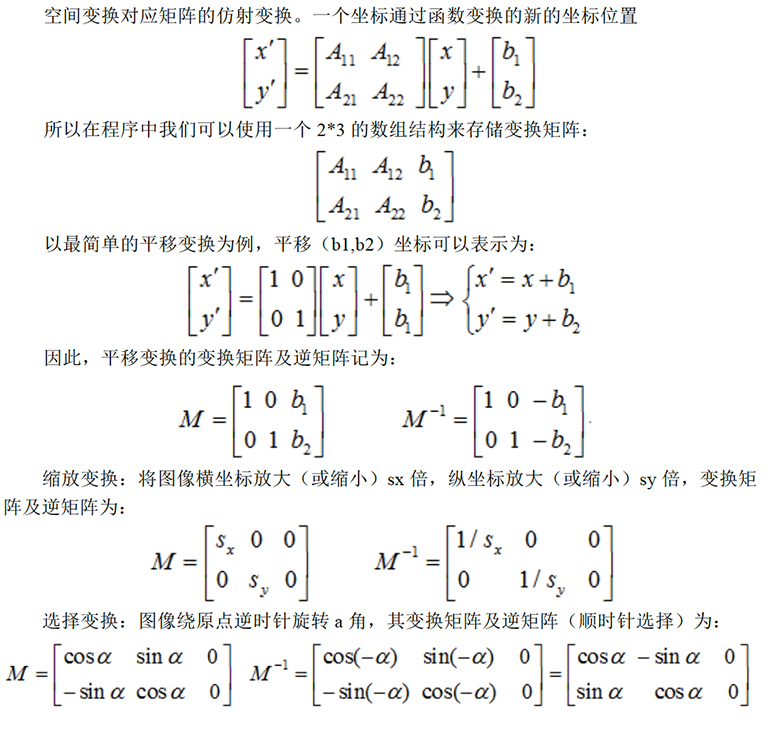

1.3 Transformation Matrix

Mat getRotationMatrix2D( Point2f center, double angle, double scale );
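As a rough illustration of what getRotationMatrix2D returns, the sketch below builds the same 2x3 matrix by hand from the formula given in the OpenCV documentation, with alpha = scale * cos(angle) and beta = scale * sin(angle). The alias Affine2x3 and the function makeRotationMatrix are hypothetical names, not OpenCV API. The angle is in degrees; positive values mean counter-clockwise rotation, with the coordinate origin at the top-left corner of the image.

```cpp
#include <array>
#include <cmath>

// Two rows of three doubles, laid out like the 2x3 matrix OpenCV returns.
using Affine2x3 = std::array<std::array<double, 3>, 2>;

// Build the rotation-plus-scale matrix about the point (cx, cy).
Affine2x3 makeRotationMatrix(double cx, double cy, double angleDeg, double scale) {
    const double pi = std::acos(-1.0);
    const double rad = angleDeg * pi / 180.0;  // degrees -> radians
    const double alpha = scale * std::cos(rad);
    const double beta = scale * std::sin(rad);

    Affine2x3 m{};
    // The third column is the translation that keeps (cx, cy) fixed.
    m[0] = {alpha, beta, (1.0 - alpha) * cx - beta * cy};
    m[1] = {-beta, alpha, beta * cx + (1.0 - alpha) * cy};
    return m;
}
```

Applying this matrix to the center itself returns the center unchanged, which is a quick sanity check on the third column.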

1.4 Affine Transformation

void warpAffine( InputArray src, OutputArray dst,

InputArray M, Size dsize,

int flags=INTER_LINEAR,

int borderMode=BORDER_CONSTANT,

const Scalar& borderValue=Scalar());
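According to the OpenCV documentation, unless WARP_INVERSE_MAP is set in flags, warpAffine first inverts M and then looks up, for every destination pixel, the source position it came from. The following is a minimal sketch of that inversion step only, written in plain C++; Affine2x3, Point2d, invertAffine, and applyAffine are hypothetical names chosen for this illustration and are not the library's implementation.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Affine2x3 = std::array<std::array<double, 3>, 2>;

struct Point2d {
    double x;
    double y;
};

// Apply a 2x3 affine matrix to a point: [x', y']^T = M * [x, y, 1]^T.
Point2d applyAffine(const Affine2x3& m, Point2d p) {
    return {m[0][0] * p.x + m[0][1] * p.y + m[0][2],
            m[1][0] * p.x + m[1][1] * p.y + m[1][2]};
}

// Invert a forward (source -> destination) affine map so that destination
// pixels can be traced back to source coordinates.
Affine2x3 invertAffine(const Affine2x3& m) {
    const double det = m[0][0] * m[1][1] - m[0][1] * m[1][0];
    assert(std::fabs(det) > 1e-12);  // the linear part must be invertible

    Affine2x3 inv{};
    // Inverse of the 2x2 linear part.
    inv[0][0] = m[1][1] / det;
    inv[0][1] = -m[0][1] / det;
    inv[1][0] = -m[1][0] / det;
    inv[1][1] = m[0][0] / det;
    // Inverse translation: -A^{-1} * t.
    inv[0][2] = -(inv[0][0] * m[0][2] + inv[0][1] * m[1][2]);
    inv[1][2] = -(inv[1][0] * m[0][2] + inv[1][1] * m[1][2]);
    return inv;
}
```

Tracing destination pixels back to the source is what makes interpolation necessary: the back-projected position is generally not an integer, so the flags argument selects how the value is interpolated there.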

11. OpenCV in Practice

1.5 Affine Transformation with warpAffine()

void COpenCVTestDlg::OnBnClickedButtonRotateScale2()

{

//http://blog.csdn.net/icvpr/article/details/8502929

cv::Mat image = cv::imread("TestImage.bmp");

if (image.empty()) return;

cv::Point2f center(image.cols * 0.3f, image.rows * 0.8f); // rotation center

double angle = 30; // rotation angle in degrees

double scale = 0.5; // scale factor

cv::Mat rotateMat;

rotateMat = cv::getRotationMatrix2D(center, angle, scale);

cv::Mat rotateImg;

cv::warpAffine(image, rotateImg, rotateMat, image.size());

cv::namedWindow("Original");

cv::namedWindow("Output");

// Show the original image and the transformed result

cv::imshow("Original", image);

cv::imshow("Output", rotateImg);

}

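1.6 Affine Transformation with warpAffine(): Advanced

We often need to apply an affine transformation to an image. Afterwards, the coordinates of feature points in the original image may have to be recomputed, for example to obtain the new position of the eye pupils, so that the pupil coordinates can still be used in the transformed image. http://www.tuicool.com/articles/RZz2Eb

1.6.1 Computing the New Coordinates of Feature Points

Suppose we already have the coordinates of a feature point in the original image, CvPoint point; its position in the new image after the affine transformation can then be computed with the following function: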

CvPoint getPointAffinedPos(const CvPoint &src, const CvPoint center, double angle)

{// Compute the new position of a given pixel after the affine transformation (angle in radians)

CvPoint dst;

int x = src.x - center.x;

int y = src.y - center.y;

dst.x = cvRound(x * cos(angle) + y * sin(angle) + center.x);

dst.y = cvRound(-x * sin(angle) + y * cos(angle) + center.y);

return dst;

}

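Pay particular attention to one point: when computing where a pixel of the original image lands after the affine transformation, follow the basic principle of the transformation and first subtract the coordinates of the rotation center from the original coordinates. Only after the rotation center is added back to the rotated result do you obtain the correct coordinates of the original point in the transformed image.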
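The subtract-then-add-back rule can be checked against the matrix that getRotationMatrix2D builds. In the short derivation below, the symbols are introduced here for illustration: $(c_x, c_y)$ is the rotation center, $\theta$ the angle in radians, $s$ the scale, $\alpha = s\cos\theta$ and $\beta = s\sin\theta$. With $s = 1$ the result matches the two assignments in getPointAffinedPos.

```latex
M =
\begin{bmatrix}
\alpha & \beta & (1-\alpha)\,c_x - \beta\,c_y \\
-\beta & \alpha & \beta\,c_x + (1-\alpha)\,c_y
\end{bmatrix},
\qquad
\begin{bmatrix} x' \\ y' \end{bmatrix}
= M \begin{bmatrix} x \\ y \\ 1 \end{bmatrix}

x' = \alpha x + \beta y + (1-\alpha)\,c_x - \beta\,c_y
   = \alpha\,(x - c_x) + \beta\,(y - c_y) + c_x

y' = -\beta x + \alpha y + \beta\,c_x + (1-\alpha)\,c_y
   = -\beta\,(x - c_x) + \alpha\,(y - c_y) + c_y
```

The third column of $M$ is therefore exactly the term that subtracts the center before rotating and adds it back afterwards.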

The sample code below computes the positions of the pupil coordinates after rotation:

Mat ImageRotate(Mat & src, const CvPoint &_center, double angle)

{

CvPoint2D32f center;

center.x = float(_center.x);

center.y = float(_center.y);

// Compute the affine matrix of the 2D rotation (angle in degrees)

Mat M = getRotationMatrix2D(center, angle, 1);

// rotate

Mat dst;

warpAffine(src, dst, M, cvSize(src.cols, src.rows), CV_INTER_LINEAR);

return dst;

}

void COpenCVTestDlg::OnBnClickedButtonAffine()

{

string image_path = "lena.jpg";

Mat img = imread(image_path);

if (img.empty()) return; // stop if the image could not be loaded

cvtColor(img, img, CV_BGR2GRAY);

Mat src;

img.copyTo(src);

CvPoint Leye;

Leye.x = 265;

Leye.y = 265;

CvPoint Reye;

Reye.x = 328;

Reye.y = 265;

// draw pupil

src.at<unsigned char>(Leye.y, Leye.x) = 255;

src.at<unsigned char>(Reye.y, Reye.x) = 255;

imshow("src", src);

CvPoint center;

center.x = img.cols / 2;

center.y = img.rows / 2;

double angle = 15.0; // rotation angle in degrees

Mat dst = ImageRotate(img, center, angle);

// Compute the coordinates of the original feature points in the rotated image

CvPoint l2 = getPointAffinedPos(Leye, center, angle * CV_PI / 180);

CvPoint r2 = getPointAffinedPos(Reye, center, angle * CV_PI / 180);

// draw pupil

dst.at<unsigned char>(l2.y, l2.x) = 255;

dst.at<unsigned char>(r2.y, r2.x) = 255;

//Mat dst = ImageRotate2NewSize(img, center, angle);

imshow("dst", dst);

waitKey(0);

}

Here the pupil coordinates are first located by hand, and their positions after the image rotation are then computed.

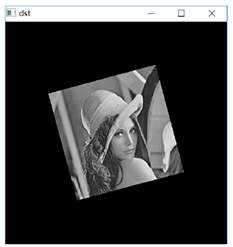

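1.6.2 Effect of the Rotation Center

Next, consider how the choice of rotation center affects the rotated image. Usually the image center is chosen as the center of the affine rotation, and the rotated image has the same size as the original.

Code: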

void COpenCVTestDlg::OnBnClickedButtonAffine2()

{

string image_path = "lena.jpg";

Mat img = imread(image_path);

if (img.empty()) return; // stop if the image could not be loaded

cvtColor(img, img, CV_BGR2GRAY);

Mat src;

img.copyTo(src);

CvPoint Leye;

Leye.x = 265;

Leye.y = 265;

CvPoint Reye;

Reye.x = 328;

Reye.y = 265;

// draw pupil

src.at<unsigned char>(Leye.y, Leye.x) = 255;

src.at<unsigned char>(Reye.y, Reye.x) = 255;

imshow("src", src);

CvPoint center;

//center.x = img.cols / 2;

//center.y = img.rows / 2;

center.x = 0;

center.y = 0;

double angle = 15.0; // rotation angle in degrees

Mat dst = ImageRotate(img, center, angle);

// Compute the coordinates of the original feature points in the rotated image

CvPoint l2 = getPointAffinedPos(Leye, center, angle * CV_PI / 180);

CvPoint r2 = getPointAffinedPos(Reye, center, angle * CV_PI / 180);

// draw pupil

dst.at<unsigned char>(l2.y, l2.x) = 255;

dst.at<unsigned char>(r2.y, r2.x) = 255;

//Mat dst = ImageRotate2NewSize(img, center, angle);

imshow("dst", dst);

waitKey(0);

}
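One way to see what moving the rotation center does is to follow a single reference point, such as the midpoint of the image, through both choices of center. The sketch below does this in plain C++ with sub-pixel precision; Point2d and rotateAbout are hypothetical names, and the 512x512 image size used in the usage note is only an assumed example.

```cpp
#include <cmath>

struct Point2d {
    double x;
    double y;
};

// Rotate p about `center` by angleDeg (degrees) and scale by `scale`,
// using the same sign convention as getRotationMatrix2D.
Point2d rotateAbout(Point2d p, Point2d center, double angleDeg, double scale) {
    const double rad = angleDeg * std::acos(-1.0) / 180.0;
    const double alpha = scale * std::cos(rad);
    const double beta = scale * std::sin(rad);
    const double dx = p.x - center.x;  // coordinates relative to the center
    const double dy = p.y - center.y;
    return {alpha * dx + beta * dy + center.x,
            -beta * dx + alpha * dy + center.y};
}
```

For an assumed 512x512 image rotated by 15 degrees, the midpoint (256, 256) stays where it is when the center is (256, 256), but moves to roughly (313.5, 181.0) when the center is (0, 0). The whole image content swings around the top-left corner, so part of it leaves the output frame.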

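1.6.3 Effect of the Scale Factor on the Rotated Image

None of the code above applies any scaling. We now modify it slightly to add a scale parameter and see how the corresponding new coordinates are computed.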

CvPoint getPointAffinedPos(const CvPoint &src, const CvPoint center, double angle, double scale)

{// Compute the new position of a given pixel after the affine transformation (angle in radians)

CvPoint dst;

int x = src.x - center.x;

int y = src.y - center.y;

dst.x = cvRound(x * cos(angle) * scale + y * sin(angle) * scale + center.x);

dst.y = cvRound(-x * sin(angle) * scale + y * cos(angle) * scale + center.y);

return dst;

}

Mat ImageRotate(Mat & src, const CvPoint &_center, double angle, double scale)

{

CvPoint2D32f center;

center.x = float(_center.x);

center.y = float(_center.y);

// Compute the affine matrix of the 2D rotation (angle in degrees)

Mat M = getRotationMatrix2D(center, angle, scale);

// rotate

Mat dst;

warpAffine(src, dst, M, cvSize(src.cols, src.rows), CV_INTER_LINEAR);

return dst;

}

void COpenCVTestDlg::OnBnClickedButtonAffine3()

{

string image_path = "lena.jpg";

Mat img = imread(image_path);

if (img.empty()) return; // stop if the image could not be loaded

cvtColor(img, img, CV_BGR2GRAY);

double scale = 0.5;

Mat src;

img.copyTo(src);

CvPoint Leye;

Leye.x = 265;

Leye.y = 265;

CvPoint Reye;

Reye.x = 328;

Reye.y = 265;

// draw pupil

src.at<unsigned char>(Leye.y, Leye.x) = 255;

src.at<unsigned char>(Reye.y, Reye.x) = 255;

imshow("src", src);

CvPoint center;

center.x = img.cols / 2;

center.y = img.rows / 2;

double angle = 15.0; // rotation angle in degrees

Mat dst = ImageRotate(img, center, angle, scale);

// Compute the coordinates of the original feature points in the rotated image

CvPoint l2 = getPointAffinedPos(Leye, center, angle * CV_PI / 180, scale);

CvPoint r2 = getPointAffinedPos(Reye, center, angle * CV_PI / 180, scale);

// draw pupil

dst.at<unsigned char>(l2.y, l2.x) = 255;

dst.at<unsigned char>(r2.y, r2.x) = 255;

//Mat dst = ImageRotate2NewSize(img, center, angle);

imshow("dst", dst);

waitKey(0);

}

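1.6.4 Recomputing the Size of the Output Image

In all the computations above, the image produced by the affine transformation has the same size as the original. Since rotation and scaling can make the image content larger or smaller, we modify the code once more so that the size of the output image is recomputed before the transformed image is generated. The computation is as follows: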

CvPoint getPointAffinedPos(Mat & src, Mat & dst, const CvPoint &src_p, const CvPoint center, double angle, double scale)

{ // Compute the new position of a given pixel after the affine transformation (angle in radians)

double alpha = cos(angle) * scale;

double beta = sin(angle) * scale;

int width = src.cols;

int height = src.rows;

CvPoint dst_p;

int x = src_p.x - center.x;

int y = src_p.y - center.y;

dst_p.x = cvRound(x * alpha + y * beta + center.x);

dst_p.y = cvRound(-x * beta + y * alpha + center.y);

int new_width = dst.cols;

int new_height = dst.rows;

int movx = (int)((new_width - width)/2);

int movy = (int)((new_height - height)/2);

dst_p.x += movx;

dst_p.y += movy;

return dst_p;

}

Mat ImageRotate2NewSize(Mat& src, const CvPoint &_center, double angle, double scale)

{

double angle2 = angle * CV_PI / 180;

int width = src.cols;

int height = src.rows;

double alpha = cos(angle2) * scale;

double beta = sin(angle2) * scale;

int new_width = (int)(width * fabs(alpha) + height * fabs(beta));

int new_height = (int)(width * fabs(beta) + height * fabs(alpha));

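// Note: the rotation is always performed about the image center; the _center argument is not used here.

CvPoint2D32f center;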

center.x = float(width / 2);

center.y = float(height / 2);

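// Compute the affine matrix of the 2D rotation (angle in degrees)

Mat M = getRotationMatrix2D(center, angle, scale);

// Add a translation to the rotation matrix so the result is centered in the new image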

M.at<double>(0, 2) += (int)((new_width - width )/2);

M.at<double>(1, 2) += (int)((new_height - height )/2);

// rotate

Mat dst;

warpAffine(src, dst, M, cvSize(new_width, new_height), CV_INTER_LINEAR);

return dst;

}

void COpenCVTestDlg::OnBnClickedButtonAffine4()

{

string image_path = "lena.jpg";

Mat img = imread(image_path);

if (img.empty()) return; // stop if the image could not be loaded

cvtColor(img, img, CV_BGR2GRAY);

double scale = 0.5;

Mat src;

img.copyTo(src);

CvPoint Leye;

Leye.x = 265;

Leye.y = 265;

CvPoint Reye;

Reye.x = 328;

Reye.y = 265;

// draw pupil

src.at<unsigned char>(Leye.y, Leye.x) = 255;

src.at<unsigned char>(Reye.y, Reye.x) = 255;

imshow("src", src);

//

CvPoint center;

center.x = img.cols / 2;

center.y = img.rows / 2;

double angle = 15.0; // rotation angle in degrees

//Mat dst = ImageRotate(img, center, angle, scale);

Mat dst = ImageRotate2NewSize(img, center, angle, scale);

// Compute the coordinates of the original feature points in the rotated image

CvPoint l2 = getPointAffinedPos(src, dst, Leye, center, angle * CV_PI / 180, scale);

CvPoint r2 = getPointAffinedPos(src, dst, Reye, center, angle * CV_PI / 180, scale);

// draw pupil

dst.at<unsigned char>(l2.y, l2.x) = 255;

dst.at<unsigned char>(r2.y, r2.x) = 255;

imshow("dst", dst);

waitKey(0);

}
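The size and offset arithmetic used above can be isolated and checked on its own. The sketch below repeats the same formulas in plain C++, including the truncation to int; RotatedLayout and computeRotatedLayout are hypothetical names introduced for this illustration, and the scale is assumed to be positive.

```cpp
#include <cmath>

struct RotatedLayout {
    int width;    // width of the output image
    int height;   // height of the output image
    int offsetX;  // translation added to M(0, 2)
    int offsetY;  // translation added to M(1, 2)
};

// Size of the bounding box of a width x height image after rotation by
// angleDeg (degrees) and scaling, plus the shift that recenters the result.
RotatedLayout computeRotatedLayout(int width, int height,
                                   double angleDeg, double scale) {
    const double rad = angleDeg * std::acos(-1.0) / 180.0;
    const double alpha = std::fabs(std::cos(rad)) * scale;
    const double beta = std::fabs(std::sin(rad)) * scale;

    RotatedLayout out;
    out.width = static_cast<int>(width * alpha + height * beta);
    out.height = static_cast<int>(width * beta + height * alpha);
    // Integer division, as in the listing above; negative when the image shrinks.
    out.offsetX = (out.width - width) / 2;
    out.offsetY = (out.height - height) / 2;
    return out;
}
```

For an assumed 512x512 input with a 15 degree angle and a scale of 0.5, this gives a 313x313 output and an offset of -99 in both directions, so feature points computed in the original frame must be shifted by the same amount.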

Contact

Email: david.lu@lontry.cn

Phone: 0755-29952252; Engineer Lu: 15999607370

QQ: 457841768
